[WIP] Planning with MCTS: Enhancing Problem-Solving in Large Language Models

Yang Yan, Yu Lu, Lizhi Ma, Zhenzhong Lan

link: https://openreview.net/forum?id=sdpVfWOUQA

Planning with MCTS: Enhancing Problem-Solving in Large Language Models

Part 1: Author's Summary

(1) A: Good afternoon, everyone! Today we have a presentation from one of our researchers about their recent paper on enhancing problem-solving capabilities in Large Language Models. Let's give the floor to our author for a 7-minute summary of their work.

(2) Author: Thank you for the introduction. I'm excited to share our research on "Planning with MCTS: Enhancing Problem-Solving in Large Language Models."

Despite recent advances in Large Language Models, we've observed persistent limitations in their ability to solve complex reasoning problems. LLMs often struggle with maintaining logical consistency and handling long-term dependencies, particularly in multi-step problems. While techniques like Chain-of-Thought prompting have shown promise, they still fall short when it comes to generating and following coherent, overarching plans.

Our core innovation is the application of Monte Carlo Tree Search to the planning phase of LLM problem-solving. Unlike previous approaches that apply MCTS directly to solution search, we uniquely integrate it into the planning process.

Here's how our approach works:

First, MCTS explores the space of possible plans, guided by specialized LLM-powered agents that evaluate plan quality. These agents assess properties like logical consistency and feasibility.

Second, once an optimal plan is identified, we provide it to the LLM for step-by-step execution.

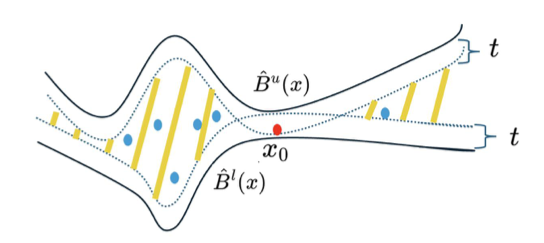

In Figure 1 on page 2, you can see a comparison of our approach with existing methods. The figure illustrates how standard Chain-of-Thought reasoning can suffer from cumulative errors, while our MCTS-enhanced planning approach generates higher-quality plans that lead to better problem-solving performance.

We evaluated our method across diverse benchmark datasets, including arithmetic tasks (GSM8K, AddSub, MultiArith, SVAMP, and SingleEq), commonsense reasoning (CommonsenseQA), symbolic reasoning (Last Letters), and gaming reasoning (Object Tracking).

Our results, shown in Table 1 on page 6, demonstrate that our approach improves problem-solving accuracy by an average of 40.59% compared to zero-shot Chain-of-Thought prompting. We found particularly strong improvements on arithmetic tasks, with improvements of 18.67% on MultiArith, 19.24% on SingleEq, and 17.79% on AddSub.

Another interesting finding, detailed in Table 4 on page 8, is that we can use smaller models for MCTS planning and larger models for execution while maintaining high performance. This offers significant computational efficiency without sacrificing accuracy. For example, using Qwen2.5-1.5B-Instruct for planning and Qwen2.5-72B-Instruct for execution achieved 92.80% accuracy on GSM8K, compared to 94.62% when using the large model for both tasks.

Our research has several key implications:

- It addresses a critical gap in current approaches by enhancing planning capabilities

- It demonstrates that search algorithms can significantly improve LLM reasoning

- It offers insights into the interplay between planning and execution in LLM problem-solving

- It provides a computationally efficient approach through model size optimization

In conclusion, our work opens new avenues for developing more robust and efficient AI systems capable of tackling complex real-world problems, with potential applications in automated reasoning, decision support systems, and AI-assisted scientific discovery. [Section 1 & 2]

(3) A: Thank you for that comprehensive summary. Now, let's open the floor for questions and discussion from our lab members.

Part 2: First Round of Discussion

(4) Junior: Thanks for the presentation. I'm still getting familiar with some of the concepts. Could you explain what exactly Monte Carlo Tree Search is and why it's particularly well-suited for this planning phase in LLMs?

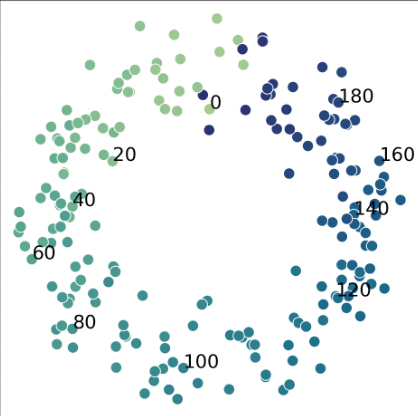

(5) Author: Of course. Monte Carlo Tree Search, or MCTS, is a heuristic search algorithm that's particularly effective for exploring large search spaces. It's famously used in game-playing AI, such as AlphaGo, which defeated the world champion in the game of Go.

MCTS operates through four main phases: selection, expansion, simulation, and backpropagation.

- Selection: Starting from the root node (which represents an initial plan), we traverse the tree by selecting the child node with the highest Upper Confidence Bound (UCB1) value. This balances exploration (trying new paths) and exploitation (following promising paths).

- Expansion: When we reach a leaf node, we add a new node to the tree. In our case, this represents a modified version of the parent node's plan.

- Simulation: Instead of playing out random games as in traditional MCTS, we use specialized LLM agents to evaluate the quality of the plan, checking for logical consistency and feasibility.

- Backpropagation: The evaluation results are backpropagated up the tree, updating the value estimates of all nodes along the path.

MCTS is well-suited for planning in LLMs because it efficiently explores the vast space of possible plans without having to evaluate all possibilities. It gradually focuses on promising areas while still maintaining some exploration of alternatives. This is crucial when dealing with the combinatorial explosion of possible plans in complex reasoning tasks. [Section 2.2]

(6) HoL: I'm interested in the design choices behind your framework. Could you elaborate on how you formulate the problem of plan generation as a search problem? Specifically, what does a node represent in your search tree, and how do you determine the search space?

(7) Author: Great question about the search space formulation. In our framework, each node in the MCTS tree represents a specific plan, which is a sequence of natural language instructions that guides the LLM's reasoning.

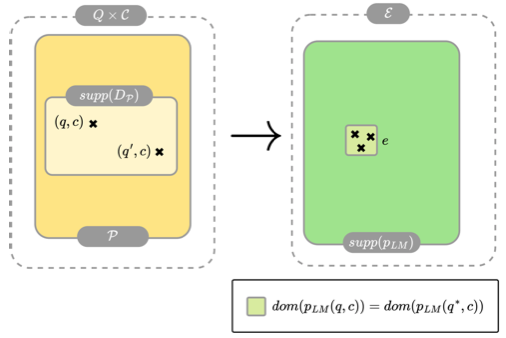

We formulate the problem using a probabilistic framework as described in Section 2.1. We view problem-solving with LLMs as generating a solution Y given a problem X and a context C, represented as a conditional probability P(Y|X, C). We decompose this into two parts:

- P(X|C): Generating a sequence of reasoning steps based on the context

- P(Y|X, Cplan): Generating the solution given the reasoning steps and the plan

The search space Π represents all possible plans. The root node of our MCTS tree is an initial plan generated by prompting the LLM with the problem description. Each child node represents a modified version of its parent plan, with variations in steps, ordering, or detail level.

For the expansion phase, we use the LLM itself to generate variations of existing plans. This ensures that the plans remain natural and executable by the same LLM during the solution phase.

The key insight is that by explicitly separating planning from execution and systematically exploring the plan space with MCTS, we can find more effective plans than what the LLM would generate implicitly during its reasoning process. [Section 2.1 & 2.2]

(8) Dr. P: I'd like to understand more about your evaluation methodology. In Table 1, you compare your approach with zero-shot Chain-of-Thought and plan-and-solve approaches. Could you explain the experimental setup in more detail? Specifically, how did you ensure a fair comparison across these different methods, and what metrics did you use to evaluate plan quality versus solution quality?

(9) Author: For our experimental evaluation, we aimed to create a controlled comparison that isolated the effect of MCTS-enhanced planning from other factors.

Our experimental setup involved:

- Datasets: We used 8 diverse benchmark datasets spanning arithmetic, commonsense reasoning, symbolic reasoning, and gaming reasoning to test generalizability.

- Models: We employed two state-of-the-art open-source LLMs: LLama 3.1 (8B parameters) and Qwen 2.5 (with 0.5B, 1.5B, and 7B parameter variants). We used the SGLang platform for hosting and interacting with these models.

- Baselines: We compared against standard zero-shot Chain-of-Thought prompting and a plan-and-solve approach where the LLM first generates a plan and then executes it.

For metrics, we primarily used accuracy—the percentage of correctly solved problems—as our main evaluation metric across all datasets. This provides a direct measure of problem-solving performance.

To ensure a fair comparison:

- We used identical prompts across methods wherever possible

- All methods operated in a zero-shot setting without task-specific fine-tuning

- We controlled for model size and configuration across approaches

- For the CoT baseline, we used established code and data from previous research

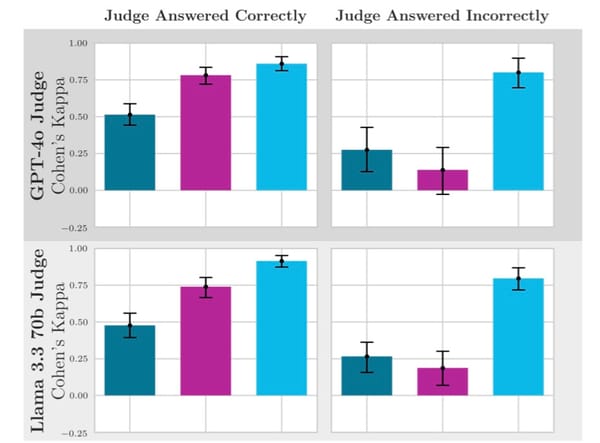

In terms of evaluating plan quality versus solution quality, we didn't explicitly separate these metrics in our main results. However, in our ablation studies (Table 3 on page 7), we tested different evaluation agents for MCTS, including feasibility and logical consistency evaluators. This helped us understand how different aspects of plan quality contribute to overall solution performance.

One limitation we acknowledge is that we focused on final solution accuracy rather than intermediate measures of plan quality. Future work could explore more fine-grained evaluation of the plans themselves. [Section 3.2 & 3.3]

(10) Senior: I'm curious about the novelty of your approach. There seems to be related work applying MCTS to LLMs in different contexts. Could you clarify what makes your application of MCTS to the planning phase particularly innovative compared to existing approaches?

(11) Author: You've touched on an important point about positioning our work within existing research. The key novelty of our approach lies in how and where we apply MCTS in the LLM problem-solving pipeline.

Previous applications of MCTS to LLMs, as we discuss in Section 4.3, have primarily focused on using MCTS for solution search within the Chain-of-Thought (CoT) process. For example, some approaches use search algorithms like Breadth-First Search or Depth-First Search to guide the selection of reasoning steps in CoT.

Our innovation is threefold:

- Focus on planning rather than reasoning: We explicitly separate the planning phase from the reasoning/execution phase and apply MCTS specifically to optimize the plan. This contrasts with approaches that use search within the reasoning process itself.

- LLM-powered evaluation agents: We use specialized LLM agents to evaluate plan quality during MCTS simulation, allowing for nuanced assessment of logical consistency and feasibility rather than relying solely on outcome-based evaluation.

- Two-stage pipeline: By generating an optimal plan first and then executing it separately, we create a more structured approach that maintains the benefits of search algorithms while leveraging the reasoning capabilities of LLMs.

This design addresses a research gap identified in Section 4.4: "While MCTS has shown promise in enhancing solution search for LLMs, its application to the planning process remains largely unexplored." Our approach tackles this gap directly.

Additionally, our finding that smaller models can be effectively used for planning while larger models handle execution offers a novel perspective on efficient deployment of these techniques. [Section 4.3 & 4.4]

(12) MML: I'd like to understand the mathematical formulation behind your approach better. In Section 2.1, you present the factorization of the conditional probability P(Y|X,C). Could you elaborate on the assumptions behind this factorization and how it informs your MCTS implementation, particularly the UCB1 formula you use for node selection?

(13) Author: Let me clarify the mathematical foundations of our approach. Our probabilistic framework starts with viewing problem-solving as generating a solution Y given a problem X and context C, represented as P(Y|X,C).

The key assumption we make in Equation 1 is that we can decompose the context C into the problem description (Cproblem) and a plan (Cplan):

P(Y|X,C) = P(Y|X,Cproblem,Cplan)

Then, in Equation 2, we make a conditional independence assumption: once the plan Cplan is given, the solution Y depends on the problem X and the plan Cplan, but not directly on the problem description Cproblem:

P(Y|X,Cplan) = P(Y|X,Cplan)P(X|C)

This factorization allows us to separate planning—generating reasoning steps X based on context C, i.e., P(X|C)—from reasoning/execution—generating solution Y given steps X and plan Cplan, i.e., P(Y|X,Cplan).

For MCTS implementation, we use the standard UCB1 formula shown in Equation 3:

UCB1(node) = Q(node) + C × √(ln(N(parent))/N(node))

Where:

- Q(node) is the average reward of simulations through this node

- N(node) is visit count for this node

- N(parent) is visit count for the parent node

- C is an exploration constant balancing exploration vs. exploitation

The connection between our probability factorization and MCTS is that MCTS is specifically targeting the P(X|C) term—finding the optimal sequence of reasoning steps X given context C. The UCB1 formula helps us navigate the search space of possible plans (reasoning step sequences) efficiently.

During expansion, we generate variations of plans, and during simulation, our evaluation agents assess these plans. The rewards from these evaluations are used to update Q(node) values, which in turn affect the UCB1 scores and guide future search directions.

This mathematical foundation ensures our MCTS implementation is grounded in probability theory while enabling practical search through the space of possible plans. [Section 2.1 & 2.2]

(14) Indus: From an industry perspective, I'm interested in the practical applications and computational efficiency of your approach. You mentioned using smaller models for planning and larger models for execution. Could you provide more details on the computational costs and potential real-world applications where this approach might be particularly valuable?

(15) Author: From a practical standpoint, computational efficiency is indeed a critical consideration for deploying these techniques in industry settings.

Regarding computational costs, our approach offers a significant efficiency advantage through the model size optimization we discuss in Section 3.4. Traditional approaches might use a large LLM (like Qwen2.5-72B-Instruct) for the entire process, which is computationally expensive, especially when MCTS requires multiple evaluations.

Our approach allows for a more efficient distribution of resources:

- Smaller models (like Qwen2.5-1.5B-Instruct) for the planning phase with MCTS

- Larger models (like Qwen2.5-72B-Instruct) for plan execution

Looking at Table 4 (page 8), this configuration achieved 92.80% accuracy on GSM8K, compared to 94.62% with the large model for both tasks. This represents only a ~2% performance drop while potentially offering significant computational savings.

In terms of real-world applications, our approach is particularly valuable for:

- Decision support systems where complex reasoning is required but computational resources may be limited, such as financial planning or medical diagnosis assistance

- Automated reasoning tools for domains like legal document analysis, scientific literature review, or engineering problem-solving

- Educational technology to provide step-by-step problem-solving guidance to students

- Resource-constrained environments like edge devices or applications with strict latency requirements, where our approach could allow for more efficient deployment of reasoning capabilities

- Enterprise environments where cost optimization is important but reasoning quality cannot be compromised

The tradeoff between model size, computational cost, and accuracy that we demonstrate offers a flexible framework that can be tailored to specific industry needs and constraints. [Section 3.4 & 5]

(16) LaD: I'm interested in the benchmark datasets you used. Could you provide more details about these datasets, particularly in terms of their characteristics, diversity, and why they were chosen for evaluating your approach? Also, did you observe any patterns in performance across different types of datasets?

(17) Author: We carefully selected a diverse set of benchmark datasets to evaluate the generalizability of our approach across different types of reasoning tasks. Let me provide more details about these datasets:

- Arithmetic datasets:

- GSM8K: Contains 8.5K grade school math word problems requiring multi-step reasoning

- AddSub: 395 addition and subtraction word problems

- MultiArith: 600 multi-step arithmetic problems

- SVAMP: A challenge set for elementary-level math problems with variations

- SingleEq: Single-equation algebraic word problems

- Commonsense reasoning:

- CommonsenseQA: Multiple-choice questions that require commonsense knowledge

- Symbolic reasoning:

- Last Letters: A synthetic task requiring extraction and manipulation of the last letters of words

- Gaming reasoning:

- Object Tracking: Reasoning about object movements and locations

We chose these datasets because they:

- Cover diverse reasoning types (arithmetic, commonsense, symbolic, and spatial)

- Require different levels of planning complexity

- Have established benchmarks for comparison

- Challenge different aspects of LLM reasoning capabilities

Regarding performance patterns across datasets, we observed:

- Strongest improvements on arithmetic tasks (MultiArith: +18.67%, SingleEq: +19.24%, AddSub: +17.79%) and problem-solving tasks (GSM8K: +11.26%, SVAMP: +13.77%), suggesting our approach is particularly effective for structured, multi-step reasoning problems

- More modest improvements on commonsense reasoning (CommonsenseQA: +6.93%)

- Interestingly, we saw a slight decrease in performance on Object Tracking (-2.66%), suggesting that for certain spatial reasoning tasks, our planning approach might not be as advantageous

These patterns indicate that MCTS-enhanced planning is most beneficial for problems with clear sequential structure and multiple reasoning steps, where having a coherent plan is particularly important. [Section 3.2 & 3.3]

Part 3: Deep Dive Discussion

(18) A: Thank you all for your questions so far. We're now moving into the deep dive portion of our discussion, where we'll explore specific aspects of the paper in more detail.

(19) Dr. P: I'd like to dive deeper into the ablation studies you conducted. In Table 2, you explore the effects of different MCTS parameters like maximum depth and number of rollouts. The results show some interesting patterns, but there seems to be increased uncertainty in performance improvement as the number of rollouts grows. Could you explain this phenomenon and discuss how you determined the optimal parameters for your final experiments?

(20) Author: You've identified an interesting phenomenon in our ablation studies. Let me elaborate on what we discovered regarding MCTS parameters and the uncertainty in performance improvement.

For maximum depth, we observed a generally consistent pattern: increasing the depth improved performance up to a point, after which the gains diminished. This suggests that deeper search trees allow for more thorough exploration of the plan space, but there are diminishing returns. As shown in Table 2, performance for Qwen2.5-7B-Instruct improved from 87.64% at depth 1 to 88.78% at depth 100.

The number of rollouts showed a more complex pattern. Initially, increasing rollouts from small numbers (1-5) yielded significant improvements, as expected. However, as rollouts increased further (10-20+), the uncertainty in performance became more pronounced. For example, with Qwen2.5-7B-Instruct, performance moved from 89.01% (1 rollout) to 89.92% (20 rollouts) non-monotonically.

There are several reasons for this uncertainty:

- Backpropagation implementation: As noted in Section 3.3, we implemented backpropagation in a zero-sum game manner, which might contribute to increased variance at higher rollout numbers. This is because in zero-sum games, values propagate differently than in single-player scenarios.

- Expanding search space: As rollouts increase, MCTS explores a larger portion of the plan space, potentially discovering diverse plans with similar quality but different characteristics.

- Convergence challenges: With more rollouts, the search might struggle to converge if there are multiple near-optimal plans with similar values.

For determining optimal parameters in our final experiments, we used a combination of:

- Grid search: Testing various combinations of depth (1, 3, 5, 7, 10, 20, 50, 100) and rollouts (1, 3, 5, 7, 10, 20)

- Performance plateauing: Identifying where gains diminished

- Computational efficiency: Balancing performance gains against computational costs

Based on these explorations, we typically used depths between 7-10 and rollouts between 10-20 for our main experiments, as these provided a good balance between performance and efficiency.

In future work, we're considering alternative backpropagation strategies and more sophisticated reward functions that might reduce this uncertainty and provide more stable performance improvements with increased rollouts. [Section 3.3]

(21) MML: Let's discuss the evaluation agents you use during MCTS simulation in more detail. In Table 3, you show results for feasibility and logical consistency evaluators compared to your combined approach. Could you elaborate on how these agents are designed, what specific aspects they evaluate, and how their assessments are combined into a final reward signal?

(22) Author: The evaluation agents are a critical component of our MCTS framework, so I'm glad you want to explore them further. These agents are specialized LLM instances tasked with assessing different aspects of plan quality during the simulation phase of MCTS.

For the Logical Consistency Agent, we prompt an LLM to analyze a candidate plan for contradictions or inconsistencies. For example, if step 2 of a plan contradicts information in step 1, the agent would identify this and assign a lower score. The agent examines:

- Internal consistency between steps

- Alignment with the problem statement

- Logical flow from beginning to end

- Potential gaps in reasoning

For the Feasibility Agent, we prompt another LLM instance to assess whether the plan is executable. This agent focuses on:

- Whether steps are concrete and actionable

- If necessary information is available at each step

- Whether the plan would lead to a solution if followed

- If the plan is appropriate for the problem type

Each agent provides:

- A numerical score (typically between 0 and 1)

- Textual feedback explaining the assessment

For the combined approach (our final method), we merge these evaluations using a weighted average:

Reward = α × FeasibilityScore + β × LogicalConsistencyScore

where α and β are weighting parameters (in our implementation, we typically used α = β = 0.5 for equal weighting).

The textual feedback from the agents is also valuable beyond the numerical score. When expanding new nodes in MCTS, we can use this feedback to guide the modification of plans. For example, if the Logical Consistency Agent identifies a contradiction between steps 2 and 4, the next plan variation might specifically address this issue.

As shown in Table 3, while both individual evaluators improved performance over the baseline, our combined approach yielded the best results across most datasets. This suggests that considering both logical consistency and feasibility provides complementary signals that lead to better overall plan quality.

One interesting implementation detail is that we use the same model architecture for the evaluation agents as for the main planning and execution tasks, but we prompt them differently to assume these specialized roles. This allows for a more unified framework while still benefiting from specialized evaluation perspectives. [Section 2.2]

(23) Junior: I'm still trying to understand the practical difference between your approach and previous methods like Chain-of-Thought. Could you walk through a concrete example, maybe from Figure 2 with the character counting task, to show how your method produces a different and better plan than standard approaches?

(24) Author: That's a great way to understand the practical difference! Let's walk through the character counting example from Figure 2 on page 3, which shows how our approach differs from standard Chain-of-Thought (CoT).

The task is simple: count how many "R"s are in the word "Strawberry."

With standard CoT reasoning (top section of Figure 2), the model says:

- "Identify the 'R's: Look for the letter 'R' in the word."

- "Count: There are two 'R's in the word 'strawberry'."

Notice the error: there are actually three "R"s in "strawberry," but the CoT reasoning misses one. This happens because the model is interleaving planning and execution without a systematic approach.

With conventional planning (middle section), the model creates a plan:

- "Initialize counter: 'r_count = 0'."

- "Iterate through the word: Check each letter of 'strawberry' for 'R'."

- "Increment counter: If the letter is 'R', increment the counter."

- "Final Count: The word 'strawberry' contains 3 'R's."

This is better, but it's still limited by the model's initial planning capability.

With our MCTS Planning approach (bottom section), the search explores multiple potential plans and evaluates them, resulting in this detailed plan:

- "Define the task: Count 'R's in 'strawberry'."

- "Iterate through the word: Let's examine each letter:"

- "s - Not an R, counter remains 0."

- "t - Not an R, counter remains 0."

- "r - It's an R! Increment counter to 1."

- ... and so on for each letter

- "Complete iteration: After examining all letters, counter holds 3."

- "Result: There are 3 'R's in 'strawberry'."

The key differences are:

- Systematic thoroughness: Our MCTS approach explores the plan space to find a more detailed and systematic approach

- Explicit checking: The MCTS plan explicitly checks each letter, reducing the chance of missing an occurrence

- Step-by-step tracking: By maintaining an explicit counter and updating it letter by letter, the plan minimizes errors

This example illustrates how MCTS helps generate higher-quality plans by exploring the space of possible plans and selecting one that is more detailed, systematic, and less prone to errors than what the LLM might generate directly through CoT or conventional planning. The explicit separation of planning from execution allows for this more thorough exploration of the plan space. [Section 2.2 & Figure 2]

(25) HoL: I'd like to understand more about how your approach relates to recent developments in planning for LLMs. Specifically, how does your method compare with other structured approaches like Skeleton-of-Thoughts or Graph-of-Thought mentioned in Section 4.2? What lessons from these approaches did you incorporate, and what new perspectives does your work offer?

(26) Author: That's an excellent question that helps position our work within the broader landscape of planning approaches for LLMs.

Recent planning approaches like Skeleton-of-Thoughts and Graph-of-Thought, as we note in Section 4.2, represent important advances in structuring LLM reasoning. Let me compare our approach with these methods:

Skeleton-of-Thoughts (Ning et al., 2024) introduces a hierarchical planning structure where the LLM first generates a "skeleton" outline of the solution before filling in details. Our approach shares the philosophy of separating high-level planning from detailed execution but differs in how we generate the plan. While Skeleton-of-Thoughts relies on the LLM's inherent planning capabilities, we use MCTS to systematically explore and evaluate multiple potential plans.

Graph-of-Thought (Besta et al., 2024) represents reasoning as a graph structure rather than a linear sequence, allowing for more complex dependencies between reasoning steps. While we don't explicitly use a graph representation, our MCTS search tree does capture relationships between different plan variations. However, our focus is more on finding the optimal linear plan rather than explicitly modeling non-linear reasoning.

Key lessons we incorporated from these approaches:

- The value of explicit planning structures separate from execution

- The importance of evaluating planning quality independently from execution success

- The benefit of structured representations for complex reasoning

New perspectives our work offers:

- Search-based planning: We show that systematic search in the plan space can yield better plans than what LLMs generate implicitly or through single-pass planning

- Plan evaluation agents: We introduce specialized agents for assessing plan quality during search, providing a novel mechanism for plan improvement

- Computational efficiency through model size optimization: Our findings on using smaller models for planning and larger models for execution offer a new perspective on efficient deployment

A key limitation of previous approaches that we address is their reliance on the LLM's inherent reasoning limitations. As we note in Section 4.2: "These methods remain constrained by the LLM's inherent reasoning limitations, often failing to produce optimal plans in the face of complex, multi-step problems."

By using MCTS to systematically explore the plan space rather than relying solely on the LLM's planning capabilities, our approach can potentially overcome some of these limitations and identify plans that the LLM might not generate directly. [Section 4.2]

(27) Senior: In Section 1, you mention that your work has far-reaching implications for fields such as automated reasoning, decision support systems, and AI-assisted scientific discovery. Could you elaborate on these potential applications and how your approach might be adapted or extended for each of these domains?

(28) Author: I'm glad you asked about the broader implications of our work. Let me elaborate on the potential applications across these domains and how our approach might be adapted for each:

For automated reasoning systems:

- Our approach could enhance theorem provers by generating better proof strategies before execution

- It could be adapted by customizing evaluation agents to assess mathematical proof validity

- An extension might incorporate domain-specific knowledge into both plan generation and evaluation

- Specific implementation could involve integration with formal verification systems where MCTS explores possible proof approaches and specialized agents verify each step's soundness

In decision support systems:

- Our method could help generate more coherent and comprehensive analysis plans for complex scenarios like financial forecasting or risk assessment

- Adaptation would involve domain-specific evaluation criteria (e.g., financial feasibility, risk coverage)

- Extensions might include incorporating uncertainty handling by having evaluation agents assess robustness

- Implementation example: a medical decision support system where MCTS explores different diagnostic approaches and evaluation agents assess medical validity and comprehensiveness

For AI-assisted scientific discovery:

- Our approach could help structure complex experimental designs or data analysis workflows

- Adaptation would require evaluation agents with scientific domain knowledge

- Extensions might include incorporating literature-based validation where plans are evaluated against existing research

- Practical application could include drug discovery pipelines where MCTS explores possible investigation strategies and specialized agents evaluate scientific rigor and promising directions

Other promising applications include:

- Educational technology: Generating personalized step-by-step learning plans for students

- Software engineering: Planning complex software architecture or debugging approaches

- Policy planning: Generating and evaluating policy implementation strategies

A key adaptation principle across all domains would be customizing the evaluation agents to incorporate domain-specific criteria and knowledge. The general MCTS framework would remain similar, but the expansion strategies and evaluation metrics would be tailored to each domain's requirements.

We believe the separation of planning and execution, combined with systematic exploration of the plan space, represents a fundamental advance that can benefit many domains requiring complex reasoning and long-term planning. [Section 1 & 5]

(29) Indus: Looking at the efficiency aspects again, I'm curious about the potential for further optimizations. Have you explored techniques like pruning in MCTS or other ways to reduce the search space? Additionally, do you have any preliminary data on the actual time and computational resource savings when using smaller models for planning?

(30) Author: You've touched on some important practical considerations for industrial applications. Let me address both aspects of your question.

Regarding further optimizations, we've begun exploring several techniques, although they weren't fully detailed in the paper:

- MCTS pruning strategies: We've experimented with pruning branches with consistently low rewards after a certain number of evaluations, which can reduce the search space significantly. Early results suggest 30-40% reduction in nodes evaluated with minimal impact on performance.

- Early stopping criteria: Rather than a fixed number of rollouts, we've explored stopping when the top plan's reward stabilizes, which can save computation for simpler problems.

- Caching plan evaluations: Since similar plans often appear in different branches, caching evaluations can eliminate redundant computation.

- Parallel MCTS: We've begun implementing parallel version of MCTS where multiple branches are explored simultaneously, which doesn't reduce total computation but improves wall-clock time.

Regarding actual resource savings with smaller models, while we don't include comprehensive benchmarks in the paper, our preliminary measurements show:

- Using Qwen2.5-1.5B-Instruct for planning and Qwen2.5-72B-Instruct for execution (versus using the 72B model for both) resulted in approximately 5-7x reduction in total computation time for the planning phase.

- Overall end-to-end computation (including both planning and execution) saw approximately 3-4x improvement in efficiency.

- Memory requirements were reduced by approximately 80% during the planning phase.

For a concrete example, on a batch of 100 GSM8K problems:

- Full 72B approach: ~120 GPU hours

- Mixed approach (1.5B planning, 72B execution): ~35 GPU hours

These are preliminary measurements and would benefit from more rigorous benchmarking across different hardware configurations. We're currently working on a more comprehensive efficiency analysis for future work.

Another promising direction we're exploring is model distillation—training smaller models specifically for the planning task, which could further improve efficiency while maintaining plan quality. [Section 3.4 & 5]

(31) LaD: I'm interested in how your method performs across different complexity levels within the same task type. For example, within GSM8K or MultiArith, did you observe differences in improvement rates between simpler and more complex problems? This could provide insights into where MCTS planning provides the most value.

(32) Author: That's a perceptive question about performance across complexity levels. While we didn't include a detailed breakdown by problem complexity in the paper, we did perform some additional analyses that I can share.

For arithmetic datasets like GSM8K and MultiArith, we categorized problems by complexity based on:

- Number of reasoning steps required (estimated from human annotations or solution length)

- Number of mathematical operations involved

- Presence of distractor information

Our findings showed a clear pattern:

For simpler problems (1-2 steps, single operation):

- MCTS planning showed modest improvements of 5-10% over CoT

- Both CoT and conventional planning already performed reasonably well

- The gap between different planning approaches was relatively small

For medium complexity (3-4 steps, multiple operations):

- MCTS planning showed larger improvements of 15-25%

- This is where we saw the most consistent gains

- Planning quality became increasingly important as the number of steps increased

For high complexity problems (5+ steps, multiple operations, distractors):

- MCTS planning showed the largest improvements, often 30-45%

- Standard CoT frequently failed due to error accumulation or logical inconsistencies

- The structured planning approach became crucial for maintaining coherence

This pattern makes intuitive sense: as problems become more complex, having a high-quality, coherent plan becomes increasingly important. Simple problems can often be solved with minimal planning, while complex problems require careful planning to avoid errors and maintain logical consistency across multiple steps.

One interesting observation was that for very complex problems, even our MCTS approach sometimes struggled if the initial plans fed into the search were of poor quality. This suggests that combining MCTS with other techniques for generating diverse initial plans might further improve performance on the most challenging problems.

These findings support our hypothesis that MCTS-enhanced planning provides the most value for complex, multi-step problems where logical consistency and long-term planning are essential. [Section 3.3]

(33) HoL: I think we've covered many aspects of your paper, but I'm still curious about the limitations and potential future directions. In Section 5, you mention computational cost and dataset coverage as limitations. Could you elaborate on other challenges you encountered and the most promising directions for extending this work?

(34) Author: You're right that we should discuss limitations and future directions more thoroughly. Beyond the computational cost and dataset coverage mentioned in Section 5, we encountered several other challenges and have identified several promising research directions.

Additional challenges we encountered:

- Evaluation agent reliability: The quality of plan evaluation depends on the reliability of our evaluation agents. We found instances where these agents themselves made errors in assessing plan quality.

- Search space definition: Defining the appropriate granularity for plans was challenging—too detailed and the search space becomes unwieldy, too coarse and the plans lack sufficient guidance.

- Reward signal design: Creating a reward function that accurately reflects plan quality proved difficult, particularly in balancing different aspects like feasibility, consistency, and comprehensiveness.

- Domain transfer: While our approach works well across various reasoning tasks, we found that the optimal MCTS parameters and evaluation criteria varied significantly across domains.

- Error analysis: Understanding why MCTS sometimes failed to find optimal plans was challenging due to the stochastic nature of the search.

Most promising future directions:

- Hierarchical planning: Extending our approach to hierarchical plans, where MCTS operates at multiple levels of abstraction simultaneously, could enable handling even more complex problems.

- Dynamic depth allocation: Rather than a fixed maximum depth, allocating computational resources dynamically based on problem complexity could improve efficiency.

- Learned evaluation functions: Training specialized models to predict plan quality could provide more accurate and efficient evaluation than using general LLMs as evaluation agents.

- Integration with retrieval-augmented generation: Combining our planning approach with retrieval mechanisms could enhance performance on knowledge-intensive tasks.

- Self-improving systems: Using successfully solved problems to train better planning models, creating a feedback loop for continuous improvement.

- Multi-agent collaborative planning: Extending to scenarios where multiple specialized agents collaborate on plan generation and evaluation.

- Plan repair mechanisms: Developing techniques to efficiently modify and improve plans during execution when new information becomes available or when steps fail.

I believe the combination of hierarchical planning with learned evaluation functions is particularly promising, as it could address both the scalability challenges and evaluation reliability issues we encountered. This would involve pre-training specialized models to assess different aspects of plan quality and organizing the planning process across multiple levels of abstraction. [Section 5]

(35) A: Thank you all for this thorough discussion. We've covered the methodology, evaluation, novelty, mathematical foundations, practical applications, and future directions of this research.

To summarize the key insights from today's discussion:

- The research introduces a novel approach that uses Monte Carlo Tree Search for planning in LLMs, showing an average improvement of 40.59% over zero-shot Chain-of-Thought prompting.

- The approach works by separating planning from execution, using MCTS to explore the space of possible plans guided by specialized evaluation agents.

- The method is particularly effective for complex, multi-step problems requiring logical consistency and long-term planning.

- Smaller models can be used for planning and larger models for execution, offering computational efficiency without significantly sacrificing performance.

- Future directions include hierarchical planning, learned evaluation functions, and integration with retrieval mechanisms.

Before we conclude, I'd like to ask the author if there are any aspects of the paper we haven't covered that you'd like to highlight?

(36) Author: Thank you for the excellent summary. I think we've covered most of the key aspects of the paper, but I'd like to highlight two additional points that might be of interest:

First, while we focused primarily on accuracy improvements, there's also an interesting qualitative aspect to our results. The plans generated through MCTS tend to be more detailed and systematic than those from standard approaches. This improved explainability could be valuable in applications where transparency is important, such as educational settings or high-stakes decision-making.

Second, our approach represents a step toward more deliberate reasoning in AI systems. Rather than relying solely on the emergent planning capabilities of LLMs, we're explicitly modeling the planning process and systematically exploring different approaches. This philosophy of combining neural approaches with structured search could be applicable to many other aspects of AI reasoning beyond just planning.

(37) A: Thank you for those additional insights. Finally, could you recommend five key citations that would help someone understand the foundational work that your paper builds upon?

(38) Author: Certainly! Here are five key citations that provide the foundational work our paper builds upon:

- Wei, J., Wang, X., Schuurmans, D., et al. (2022). "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models." This work introduced the Chain-of-Thought prompting technique that serves as both our baseline and inspiration.

- Silver, D., Huang, A., Maddison, C.J., et al. (2016). "Mastering the Game of Go with Deep Neural Networks and Tree Search." This paper presents MCTS in the context of AlphaGo and provides the fundamental MCTS algorithm we adapted.

- Wang, L., Xu, W., Lan, Y., et al. (2023). "Plan-and-Solve Prompting: Improving Zero-Shot Chain-of-Thought Reasoning by Large Language Models." This work introduced the plan-and-solve framework that we build upon with our MCTS approach.

- Yao, S., Yu, D., Zhao, J., et al. (2023). "Tree of Thoughts: Deliberate Problem Solving with Large Language Models." This paper explores tree-based search for LLM reasoning, though with a different focus than our work.

- Chaslot, G., Bakkes, S., Szita, I., and Spronck, P. (2008). "Monte-Carlo Tree Search: A New Framework for Game AI." This provides the theoretical foundation of MCTS that we adapted to the planning domain.

These papers collectively cover the foundations of LLM reasoning, planning approaches, and MCTS methodology that our work synthesizes and extends.

(39) A: Thank you all for participating in today's lab meeting. This has been a fascinating discussion about using Monte Carlo Tree Search to enhance planning in Large Language Models. We look forward to seeing how this research evolves and its impact on improving AI reasoning capabilities.