Do LLMs Plan Like Human Writers? A Lab Discussion

Alexander Spangher (University of Southern California, Information Sciences Institute), Nanyun Peng (University of California, Los Angeles), Sebastian Gehrmann (Bloomberg), Mark Dredze (Bloomberg)

link: https://aclanthology.org/2024.emnlp-main.1216/

(1) A: Good morning everyone! Today we have a presentation from our colleague, who will be discussing their recent paper titled "Do LLMs Plan Like Human Writers? Comparing Journalist Coverage of Press Releases with LLMs." After the presentation, we'll open the floor for questions and discussion. Please, go ahead.

(2) Author: Thank you for the introduction. I'm excited to share our work with you today. Our paper explores how Large Language Models (LLMs) compare to human journalists when planning coverage of press releases.

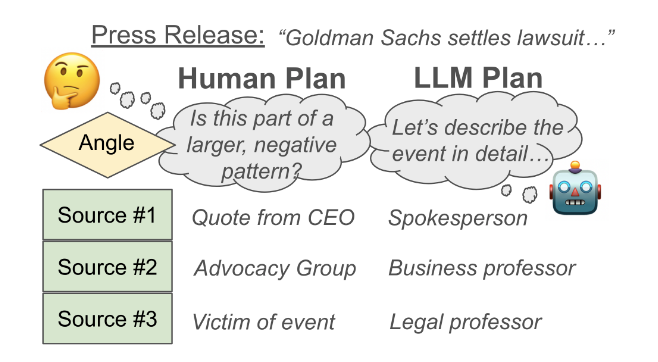

The main idea of our research stems from the observation that journalists engage in multiple creative steps when writing news, particularly in exploring different "angles", the specific perspectives a reporter takes when covering a story. While LLMs could potentially aid these creative processes, we wanted to carefully evaluate whether such interventions align with human values and journalistic practices.

For our methodology, we assembled a large dataset of 250,000 press releases and 650,000 news articles covering them. This includes notable press releases like OpenAI's GPT-2 announcement and Meta's response to the Cambridge Analytica scandal. We developed methods to identify which news articles effectively challenge and contextualize press releases, rather than simply summarizing them.

We then compared planning decisions made by human journalists with suggestions generated by LLMs under various prompts designed to stimulate creative planning. Our evaluation focused on two key planning steps: formulating angles (specific focus areas) and selecting sources (people or documents contributing information).

Our results revealed three main findings:

First, human-written news articles that challenge and contextualize press releases more effectively tend to take more creative angles and use more informational sources. We found positive correlations between the criticality of coverage and both the creativity of angles (r = .29) and the number of sources used (r = .5).
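For readers less familiar with how such coefficients arise, here is a minimal sketch of a Pearson correlation between a per-article criticality score and a per-article source count. The toy values below are invented for illustration and are not data from the paper; the function name `pearson_r` is hypothetical.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance numerator and the two variance terms (unnormalized).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Toy, invented values: per-article contradiction scores and source counts.
criticality = [0.05, 0.10, 0.20, 0.35, 0.50]
num_sources = [2, 3, 3, 6, 7]
print(round(pearson_r(criticality, num_sources), 2))
```

A value near 1 would mean the two quantities rise together almost linearly; values like .29 or .5 indicate a real but partial linear association.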

Second, LLMs align better with humans when recommending angles compared to informational sources. When fine-tuned, GPT-3.5 reached an F1-score of 63.6 for recommending angles that humans actually took, but only 27.9 for recommending sources.
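The discussion does not spell out how LLM suggestions were matched against human plans, so the sketch below assumes a caller-supplied match predicate (the hypothetical `is_match`) and computes a set-level precision, recall, and F1. Scores such as 63.6 and 27.9 are F1 values scaled by 100.

```python
def set_f1(suggested, human, is_match):
    """Set-level F1 between suggested items and human-chosen items.

    is_match(s, h) -> bool is a hypothetical matcher; the paper's actual
    matching procedure may differ (e.g. human judgment or a model).
    """
    if not suggested or not human:
        return 0.0
    # Precision: share of suggestions that match at least one human item.
    hit_suggestions = sum(1 for s in suggested if any(is_match(s, h) for h in human))
    # Recall: share of human items matched by at least one suggestion.
    hit_human = sum(1 for h in human if any(is_match(s, h) for s in suggested))
    precision = hit_suggestions / len(suggested)
    recall = hit_human / len(human)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


suggested = ["compare to competitors", "privacy concerns", "pricing"]
human = ["privacy concerns", "regulatory scrutiny"]
print(100 * set_f1(suggested, human, lambda s, h: s == h))
```

Here precision is 1/3 and recall is 1/2, so F1 is 0.4 (reported as 40.0). Exact string equality is only a stand-in; real angle matching needs a semantic judgment.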

Third, and importantly, both the angles and sources that LLMs suggest are significantly less creative than those produced by human journalists. This gap persisted across zero-shot, few-shot, and even fine-tuned approaches.

We believe our work lays important groundwork for developing AI approaches that can aid creative tasks while ensuring they align with human values. Our study represents a generalizable benchmark in creative planning tasks and offers a template for evaluating creative planning going forward. [Section 1]

(3) HoL: Thank you for that clear overview. I'd like to focus first on your methodology, particularly how you identified news articles that "challenge and contextualize" press releases. Could you elaborate on this approach and explain how it differs from previous work in this area?

(4) Author: Great question. Our approach centers on what we call "contrastive summarization." We needed to identify when a news article effectively covers a press release by challenging and contextualizing it, not just summarizing.

We examined pairs of news articles and press releases, asking two key questions: Is this news article substantially about this press release? And does this news article challenge the information in the press release?

To measure this automatically, we developed a framework based on Natural Language Inference (NLI) relations. We represented each press release and news article as sequences of sentences, then established two criteria: 1) Does the news article contextualize the press release by referencing enough of its sentences? And 2) Does the news article challenge the press release by contradicting enough of its sentences?

We calculate sentence-level NLI relations between all press release and news article sentence pairs, then aggregate these scores to the document level. This approach is inspired by Laban et al. (2022), but differs by also considering contradiction relations, not just entailment.

To validate this approach, we manually annotated 1,100 pairs of articles and press releases. Our best model (a binned-MLP with coreference resolution) achieved 73.0 F1-score for detection of challenging coverage.

This differs from previous work in that we're specifically focused on how journalists transform press releases through critical coverage, rather than just measuring factual consistency or summarization quality. [Section 3]

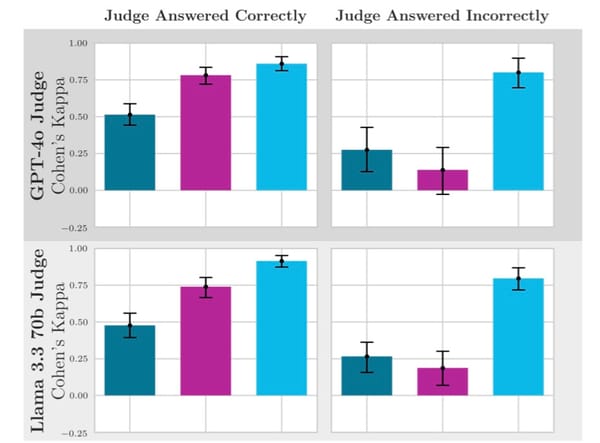

(5) Dr. P: Your evaluation approach interests me. You mentioned measuring the creativity of angles and sources, but creativity is notoriously difficult to quantify. Could you detail how you operationalized creativity in your evaluation? Also, what were the specific prompts you used with the LLMs, and did you experiment with different prompt designs?

(6) Author: You've touched on one of our most challenging tasks. To measure creativity, we developed a 5-point scale inspired by Nylund (2013), who studied journalistic ideation processes.

At the low end (scores 1-2), we classify "ingestion" or simplistic engagement with the press release, including surface-level rebuttals. In the middle (scores 3-4), we have "trend analysis" or bigger-picture rebuttals. At the high end (score 5), we identify novel, investigative directions that would require substantial journalistic work.

Two journalists served as annotators and evaluated the creativity of both human and LLM plans using this scale. We found that human journalists consistently scored higher on creativity, and the gap was significant across all LLM conditions.

Regarding prompts, we designed them to mimic a scenario where an LLM serves as a creative aide to a journalist. For angles, we asked the LLM to "de-spin" the press release, identify areas where it portrays events in an overly positive light, and suggest potential directions to pursue. Our source prompt was novel, asking the LLM to suggest types of sources (not specific individuals) that would help investigate the angles.

We tested three conditions: zero-shot (just the press release and definitions), few-shot (adding 6 examples of press release summaries and human-written plans), and fine-tuned (training GPT-3.5 on press releases paired with human plans). Fine-tuning yielded the best results, showing a 10-point increase in F1-scores for both angle and source recommendations. [Section 5.2]
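To make the few-shot condition concrete, here is a minimal sketch of assembling such a prompt from definitions, six demonstrations, and a target press release. The field names, the `Example` type, the `build_few_shot_prompt` helper, and the instruction text are all hypothetical; they are not the paper's actual prompt wording or format.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One hypothetical demonstration: a press release summary and a human plan."""
    press_release_summary: str
    human_plan: str


def build_few_shot_prompt(instructions, examples, press_release):
    """Concatenate instructions, numbered demonstrations, and the target release."""
    parts = [instructions.strip(), ""]
    for i, ex in enumerate(examples, start=1):
        parts.append(f"Example {i}")
        parts.append(f"Press release summary: {ex.press_release_summary}")
        parts.append(f"Plan: {ex.human_plan}")
        parts.append("")
    # The model is asked to continue after the final "Plan:" line.
    parts.append("Press release:")
    parts.append(press_release.strip())
    parts.append("Plan:")
    return "\n".join(parts)


demos = [Example(f"summary {i}", f"plan {i}") for i in range(1, 7)]
prompt = build_few_shot_prompt(
    "De-spin the press release and suggest angles to pursue.",
    demos,
    "Acme announces a new product.",
)
print(prompt)
```

The zero-shot condition corresponds to calling the same helper with an empty `examples` list, which keeps the two conditions identical apart from the demonstrations.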

(7) Senior: I'm particularly interested in the novelty of your work. While there's been research on how LLMs can assist with creative tasks, your focus on planning seems distinctive. How does your approach differ from previous attempts to use LLMs in journalism, and what specific contributions do you think are most innovative?

(8) Author: Our work builds on but significantly extends previous research in this area. A key reference point is Petridis et al. (2023), who explored how well LLMs could recommend unique angles to cover press releases. While their work was promising, it had limited generalizability due to a small sample size—they tested only two press releases with 12 participants.

Our main innovations include:

First, the scale and scope of our dataset. With 250,000 press releases and 650,000 articles spanning 10 years, our PressRelease corpus enables much more comprehensive analysis of journalistic practices than previously possible.

Second, our framing of effective press release coverage as "contrastive summarization." This novel framework helps identify journalism that not only conveys information but also challenges and contextualizes it. This is particularly valuable for understanding the creative decisions journalists make.

Third, our focus on planning stages rather than final output. Most work on LLMs and creativity evaluates the final creative product. We specifically examine the planning decisions that precede writing, which potentially have an outsized impact on creative output.

Fourth, our direct comparison of human and LLM planning capabilities on the same tasks using real-world examples. This provides a benchmark for creative planning that future work can build upon.

Finally, our detailed analysis of sources used in effective journalism, which reveals that more challenging coverage correlates with more resource-intensive sourcing practices. This dimension of planning hasn't been well-studied in the context of LLMs. [Section 8]

(9) LaD: I'd like to discuss your dataset in more detail. You mentioned collecting 250,000 press releases and 650,000 articles covering them. Could you elaborate on how you identified which articles were covering which press releases? And did you take any steps to ensure diversity in the dataset in terms of topics, sources, and news outlets?

(10) Author: We collected data through two complementary approaches to reduce potential biases.

In the first approach, "Press Releases ← News Outlets, Hyperlinks," we started with news articles and found hyperlinks to press releases within them. We queried Common Crawl for URLs from 9 major financial newspapers, yielding 114 million URLs. Using a supervised model to identify news article URLs, we found approximately 940,000 news articles. We then extracted hyperlinks to known press release websites, resulting in 247,372 articles covering 117,531 press releases.
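The hyperlink step can be illustrated with a minimal sketch using Python's standard `html.parser` and `urllib.parse`: collect every anchor in an article, resolve it to an absolute URL, and keep links whose host belongs to a list of press release sites. The domain list and the helper names are illustrative assumptions, not the paper's actual list or code.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def press_release_links(article_html, article_url, pr_domains):
    """Return absolute links whose host is (a subdomain of) a press release domain."""
    collector = LinkCollector()
    collector.feed(article_html)
    collector.close()
    found = []
    for href in collector.hrefs:
        absolute = urljoin(article_url, href)  # resolve relative links
        host = (urlparse(absolute).hostname or "").lower()
        if any(host == d or host.endswith("." + d) for d in pr_domains):
            found.append(absolute)
    return found


html = '<p>See <a href="https://www.prnewswire.com/news/x">release</a> and <a href="/other">other</a>.</p>'
print(press_release_links(html, "https://news.example.com/story", {"prnewswire.com"}))
```

Matching on the hostname rather than a raw substring avoids false hits such as a path that merely mentions a press release site.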

In the second approach, "Press releases → News Articles, Backlinks," we started with press releases and found articles linking to them. We compiled subdomains for press release offices of S&P 500 companies and other organizations of interest (like OpenAI and SpaceX). Using a backlinking service, we discovered pages hyperlinking to these subdomains, yielding 587,464 news articles and 176,777 press releases.

Each approach has different biases: the first is limited to major financial newspapers and might overrepresent popular press releases; the second overrepresents top companies but includes a wider array of news outlets. By combining both methods, we attempted to minimize these biases.

For cleaning, we excluded pairs where the press release link appears in the bottom half of the article (suggesting it's not the main topic) and pairs published more than a month apart. We also excluded short sentences and non-article sentences.
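The two pair-level filters can be sketched as a single predicate. This assumes each pair records which paragraph contains the press release link and the two publication dates; the `Pair` fields, the `keep_pair` name, and the 30-day approximation of "a month" are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import date

MAX_GAP_DAYS = 30  # assumption: "more than a month apart" approximated as 30 days


@dataclass
class Pair:
    """Hypothetical record for one (news article, press release) pair."""
    link_paragraph_index: int  # 0-based paragraph containing the press release link
    num_paragraphs: int
    article_date: date
    press_release_date: date


def keep_pair(pair):
    """Apply the top-half-link and publication-gap filters."""
    # Drop pairs whose link appears in the bottom half of the article.
    in_top_half = pair.link_paragraph_index < pair.num_paragraphs / 2
    # Drop pairs published more than MAX_GAP_DAYS apart, in either direction.
    gap = abs((pair.article_date - pair.press_release_date).days)
    return in_top_half and gap <= MAX_GAP_DAYS


print(keep_pair(Pair(1, 10, date(2020, 1, 15), date(2020, 1, 10))))
```

Sentence-level cleaning (dropping short or non-article sentences) would be a separate pass over each document after the pair survives these filters.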

Our final dataset includes diverse topics from corporate announcements to technological developments, though it is skewed toward financial and business news due to our data collection methods. About 28% of press releases (70,062) are covered by more than one news article, enabling future research on different coverage decisions for the same event. [Section 2.1]

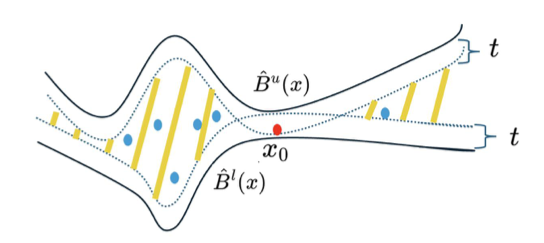

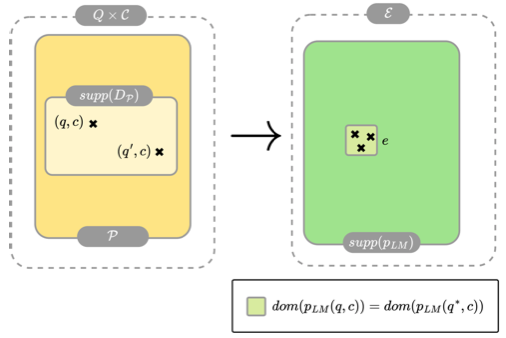

(11) MML: I'd like to focus on your theoretical framing of the problem. You describe press release coverage as "contrastive summarization" and use Natural Language Inference to detect when articles challenge press releases. Could you explain the mathematical formulation behind this approach? Specifically, how do you compute document-level NLI scores from sentence-level predictions, and what thresholds did you use to determine when an article effectively challenges a press release?

(12) Author: Let me walk through the mathematical framework. We represent each press release and news article as sequences of sentences, P⃗ = p₁, ..., pₙ and N⃗ = n₁, ..., nₘ respectively.

We establish two criteria for effective coverage:

- $\vec{N}$ contextualizes $\vec{P}$ if $\sum_{j=1}^{n} P(\text{references} \mid \vec{N}, p_j) > \lambda_1$

- $\vec{N}$ challenges $\vec{P}$ if $\sum_{j=1}^{n} P(\text{contradicts} \mid \vec{N}, p_j) > \lambda_2$

Here, "references" equals 1 if any sentence in N⃗ references (entails or contradicts) pⱼ, and 0 otherwise. "Contradicts" is 1 if any sentence in N⃗ contradicts pⱼ.
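A minimal sketch of the two criteria, under a simplifying assumption: instead of probabilities, each sentence pair carries a hard NLI label ("entailment", "contradiction", or "neutral"), so each term in the sum becomes a 0/1 indicator. The label strings, the `coverage_criteria` name, and the thresholds in the usage line are hypothetical.

```python
def coverage_criteria(labels, lambda1, lambda2):
    """Evaluate the contextualize/challenge criteria with hard NLI labels.

    labels[j][i] is the NLI label between press release sentence p_j
    and news article sentence n_i.
    Returns (contextualizes, challenges).
    """
    # references_j = 1 if any news sentence entails or contradicts p_j.
    references = [
        1 if any(lab in ("entailment", "contradiction") for lab in row) else 0
        for row in labels
    ]
    # contradicts_j = 1 if any news sentence contradicts p_j.
    contradicts = [1 if "contradiction" in row else 0 for row in labels]
    return sum(references) > lambda1, sum(contradicts) > lambda2


labels = [
    ["neutral", "entailment"],     # p_1 is referenced (entailed)
    ["contradiction", "neutral"],  # p_2 is referenced and contradicted
    ["neutral", "neutral"],        # p_3 is not addressed
]
print(coverage_criteria(labels, lambda1=1, lambda2=0))
```

With soft probabilities, each indicator would be replaced by the model's estimate that at least one news sentence stands in that relation to $p_j$.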

To calculate document-level scores, we follow a process inspired by Laban et al. (2022):

- Calculate sentence-level NLI relations, p(y|pᵢ, nⱼ), between all P⃗ × N⃗ sentence pairs

- For each press release sentence pᵢ, average the top-kᵢₙₙₑᵣ relations, generating a pᵢ-level score

- Average the top-kₒᵤₜₑᵣ pᵢ-level scores to get the document-level score

Here, kᵢₙₙₑᵣ is the number of news article sentences that must reference a press release sentence before that sentence is considered "covered," and kₒᵤₜₑᵣ is the number of covered press release sentences needed for the entire press release to count as substantially covered.
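The three aggregation steps can be sketched as nested top-k averages over a matrix of sentence-pair probabilities for one relation (entailment or contradiction). This is only the averaging step: the discussion notes that the best detector was a binned-MLP with coreference resolution, which this sketch does not reproduce. Averaging all available values when fewer than k exist is an assumption, and the function names are hypothetical.

```python
def top_k_mean(values, k):
    """Mean of the k largest values (all values if fewer than k exist; assumption)."""
    top = sorted(values, reverse=True)[:k]
    return sum(top) / len(top) if top else 0.0


def document_score(scores, k_inner, k_outer):
    """Aggregate sentence-pair NLI probabilities to a document-level score.

    scores[i][j] = p(y | p_i, n_j) for one relation y, where p_i is a
    press release sentence and n_j a news article sentence.
    """
    # Step 2: one score per press release sentence p_i.
    sentence_scores = [top_k_mean(row, k_inner) for row in scores]
    # Step 3: average the k_outer best-covered press release sentences.
    return top_k_mean(sentence_scores, k_outer)


scores = [
    [0.9, 0.1, 0.5],  # p_1 vs n_1..n_3
    [0.2, 0.4, 0.0],  # p_2
    [0.8, 0.8, 0.2],  # p_3
]
print(document_score(scores, k_inner=2, k_outer=2))
```

Here the per-sentence scores are 0.7, 0.3, and 0.8, and averaging the top two gives 0.75. Larger kᵢₙₙₑᵣ demands repeated engagement with each press release sentence; larger kₒᵤₜₑᵣ demands that engagement span more of the release.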

Through experimentation, we found the optimal parameters after coreference resolution were:

- For detecting coverage: kₒᵤₜₑᵣ = 68 for entailment, kᵢₙₙₑᵣ = 5

- For detecting challenge: kₒᵤₜₑᵣ = 45 for contradiction, kᵢₙₙₑᵣ = 5

These parameters indicate that after resolving coreferences, about 5 news article sentences must entail or contradict a press release sentence for it to be meaningfully addressed, and about 45-68 sentences in the press release need to be addressed for the entire document to be considered covered or challenged.

This approach achieved an F1-score of 80.5 for detecting coverage and 73.0 for detecting challenge on our test set. [Section 3.1 and Appendix B]

(13) Indus: From an industry perspective, I'm curious about the practical applications of your findings. How mature is this work, and what are the potential use cases? Could this be implemented as a product to assist journalists, and what would be the efficiency and cost considerations? Also, do you see any risks in deploying such LLM-based planning tools in newsrooms?

(14) Author: Great questions about practical implications. In terms of maturity, our work is still primarily research-focused rather than production-ready, but it offers several insights that could inform product development.

The most immediate application would be LLM-based tools to assist journalists in the planning stages of covering press releases. Our findings suggest that such tools would be more effective at suggesting potential angles (with a 63.6 F1-score when fine-tuned) than at recommending sources (27.9 F1-score).

The efficiency gains could be substantial. Press releases often require significant cognitive effort to "de-spin" and contextualize properly. An LLM assistant could help journalists quickly identify potential angles, saving time in the initial planning phase. This is especially valuable given dwindling resources in newsrooms, as noted by Angelucci and Cagé (2019).

However, the cost considerations aren't trivial. Our best results came from fine-tuned models, which require considerable data and computational resources. The zero-shot and few-shot prompting approaches performed worse, suggesting that off-the-shelf solutions would be less effective without customization.

As for risks, there are several important considerations:

First, our findings show that LLM plans lack creativity compared to human journalists. Relying too heavily on LLM suggestions could potentially homogenize news coverage and reduce the investigative depth that human journalists bring.

Second, there's a risk of reinforcing existing biases in coverage patterns, especially if LLMs are trained on data that reflects status quo reporting rather than exemplary journalism.

Third, if journalists become overly reliant on LLM planning, there could be a de-skilling effect where journalists lose their ability to identify novel angles independently.

To mitigate these risks, we envision these tools as supplements to, rather than replacements for, journalistic planning. The ideal implementation would be a human-in-the-loop approach where LLMs suggest potential directions that journalists can build upon with their expertise. [Section 7]

(15) Junior: I'm still trying to understand some of the basic concepts. Could you explain more clearly what you mean by "angles" and "sources" in this context? And also, what exactly is NLI and how does it help measure whether an article challenges a press release?

(16) Author: Those are excellent clarifying questions.

An "angle" in journalism refers to the specific focus or perspective a reporter takes when covering a story. For example, when covering a company's press release about a new product, an angle could be "How does this product compare to competitors?" or "What privacy concerns might this raise?" The angle shapes the entire narrative and direction of the article.

"Sources" are the people or documents that contribute information to a news article. These could be quotes from experts, references to academic studies, court documents, or other news reports. In our study, we're particularly interested in the types of sources journalists use (e.g., "an employee at the company," "a regulatory expert") rather than specific named individuals.

Natural Language Inference (NLI) is a task in natural language processing that determines the relationship between two sentences: whether one sentence entails (logically follows from) another, contradicts it, or is neutral (neither entails nor contradicts).

In our paper, we use NLI to measure how news articles relate to press releases. We calculate two types of relationships:

- "References" - when the news article either entails or contradicts information in the press release (showing the article is addressing the press release content)

- "Contradicts" - when the news article directly challenges or refutes information in the press release

By aggregating these sentence-level NLI relations to the document level, we can identify articles that substantially cover a press release (high "references" score) and challenge it (high "contradicts" score). Our findings show that effective journalism does both: it addresses the content of the press release while also providing a critical perspective.

Think of it this way: if an article only entails a press release, it is basically repeating the same information. If it neither entails nor contradicts the press release, it is probably about something else. The best journalism strikes a balance, covering the press release while also challenging and contextualizing it. [Sections 1 and 3]

(17) A: Thank you all for your insightful questions. Before we wrap up, I'd like to summarize the key insights from our discussion:

- This research establishes a novel framework for evaluating how LLMs compare to human journalists in planning news coverage of press releases.

- The study found that while LLMs can suggest angles that align reasonably well with human journalists (especially when fine-tuned), they struggle with recommending appropriate sources and generally produce less creative plans than humans.

- The researchers developed a new concept of "contrastive summarization" to identify effective press release coverage, finding that articles that both address and challenge press releases tend to use more creative angles and more informational sources.

- The methodology combines NLI-based detection of challenge and contextualization with human evaluation of creativity, providing both quantitative and qualitative perspectives.

- The practical applications include potential tools to assist journalists in the planning stages, though such tools would need to complement rather than replace human creativity.

Author, are there any aspects of your work that we haven't covered that you'd like to highlight? Also, could you share the five most important citations for understanding the foundational work that your paper builds upon?

(18) Author: Thank you for that excellent summary. One aspect we haven't discussed in depth is the future direction of this research. We see several promising avenues:

First, creativity-enhancing techniques could potentially narrow the gap between LLMs and humans. Chain-of-thought-style prompts that explicitly include creative planning steps or multi-LLM approaches might improve creativity.

Second, retrieval-oriented grounding could address LLMs' lack of awareness of prior events, which limited their planning capabilities in our study. Retrieval-augmented generation and tool-based approaches might yield improvement here.

Third, we noted that approximately 28% of press releases in our dataset are covered by multiple news articles. This presents an opportunity for future work analyzing different possible coverage decisions for the same source material.

As for the five most important citations:

- Petridis et al. (2023) - "AngleKindling: Supporting Journalistic Angle Ideation with Large Language Models" - Introduced the concept of using LLMs to suggest angles for press release coverage, though with a much smaller sample.

- Laban et al. (2022) - "SummaC: Re-visiting NLI-based Models for Inconsistency Detection in Summarization" - Provided the methodological foundation for our document-level NLI approach.

- Maat and de Jong (2013) - "How Newspaper Journalists Reframe Product Press Release Information" - Offered a theoretical framework for understanding how journalists transform press release information.

- Spangher et al. (2023) - "Identifying Informational Sources in News Articles" - Developed methods to identify the sources mentioned in news articles that we built upon.

- Tian et al. (2024) - "Are Large Language Models Capable of Generating Human-Level Narratives?" - Represents emerging templates for comparing LLM creativity with human creativity in planning tasks.

These works collectively frame our understanding of journalistic processes, creative planning, and the potential role of LLMs in assisting creative tasks. [Sections 7 and 9]

(19) HoL: Thank you for this thorough discussion. Before we close, I'd like to return to methodology once more. You mentioned correlations between the criticality of coverage and both the creativity of angles (r = .29) and the number of sources (r = .5). Could you discuss the limitations of these correlations and what they tell us about the causal relationship between these factors?

(20) Author: That's an important point to address. The correlations we found between criticality, creativity, and source usage have several limitations and should be interpreted cautiously.

First, correlation does not imply causation. While we found that more critical articles tend to use more sources and creative angles, we cannot definitively claim that one causes the other. It's possible that journalists who decide to write critical articles also happen to use more sources and creative angles, or that some third factor influences all three variables.

Second, the moderate correlation values (r = .29 for angle creativity and r = .5 for source count) indicate substantial unexplained variance. Other factors clearly influence journalistic decisions beyond those we measured.
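A quick worked check makes "substantial unexplained variance" concrete. Squaring a correlation coefficient gives the share of variance accounted for by a simple linear fit (the coefficient of determination). This is standard arithmetic applied to the reported coefficients, not a figure reported in the paper:

$$
r = 0.29 \;\Rightarrow\; r^2 \approx 0.084, \qquad r = 0.5 \;\Rightarrow\; r^2 = 0.25
$$

Under a linear model, angle creativity would account for roughly 8% and source count for 25% of the variance in criticality, leaving about 92% and 75% unexplained, respectively.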

Third, our correlational analysis doesn't account for the complex professional dynamics in newsrooms. Decisions about angles and sources are influenced by editorial policies, time constraints, access to sources, and prior relationships – factors our dataset doesn't capture.

Fourth, there may be selection bias in which press releases get critical coverage in the first place. Perhaps only certain types of press releases (e.g., from controversial companies or about sensitive topics) receive critical treatment, and these might naturally lend themselves to more creative angles and diverse sourcing.

As for what these correlations do tell us: they suggest that effective journalism involves a constellation of related practices. The stronger correlation with source count (r = .5) compared to angle creativity (r = .29) suggests that sourcing practices may be more tightly linked to critical coverage than angle selection.

This aligns with journalistic principles where multiple perspectives and evidence-based reporting are central to challenging official narratives. The finding that certain source types (person-derived quotes, r = .38) correlate more strongly with contradiction reinforces the importance of direct human sources in critical reporting.

These insights helped shape our experimental design for evaluating LLMs, focusing on both angle and source suggestions as key planning steps. [Section 4]

(21) A: Thank you all for your contributions to this discussion. We've covered the paper's methodology, findings, theoretical framework, practical applications, and future directions in depth. This has been an excellent exploration of how LLMs compare to human journalists in planning news coverage and the challenges in aligning AI assistance with human creative processes.